About BIRD

BIRD (BIg Bench for LaRge-scale Database Grounded Text-to-SQL Evaluation) represents a pioneering, cross-domain dataset that examines the impact of extensive database contents on text-to-SQL parsing. BIRD contains over 12,751 unique question-SQL pairs, 95 big databases with a total size of 33.4 GB. It also covers more than 37 professional domains, such as blockchain, hockey, healthcare and education, etc.

News

- Mar. 3, 2026: LiveSQLBench-Large-v1 is released! The industrial-scale counterpart featuring ~1K columns, ~54 tables each DB with 480 tasks, 10x schema complexity over LiveSQLBench-Base-Full-v1, ~84K avg prompt tokens for long-context challenge, and Business Rule Drift (external knowledge can change and even has inconsistency across releases) for live context-learning evaluation. See more at livesqlbench.ai.

- Mar. 6, 2026: We release the Data Intelligence Index, a comprehensive evaluation of frontier AI models and agents on data-centric intelligence, covering DB querying, BI analysis, DB application debugging, human-centric interaction, multi-modal querying, and more. If you'd like your benchmark or model to be included, please feel free to contact us!

- 🇧🇷 Feb. 8, 2026: Our paper BIRD-Interact has been accepted to ICLR 2026 (Oral)! See you in Rio 🇧🇷!

- 🌴 Nov. 30, 2025: Our paper SWE-SQL has been accepted to NeurIPS 2025 (main track)! You can find us in San Diego 🌴 🦞. Feel free to contact us if you’d like to chat: Patrick, Xiaolong Li.

-

Nov. 13, 2025:

Major Updates

1. Our team with the efforts of global engineers, experts, and students has completed comprehensive quality control for BIRD-SQL, releasing bird-sql-dev-1106, which is a cleaner development split. For new submissions using this updated split, please indicate this in your submission so we can mark it accordingly on the leaderboard. To better resolve ambiguity, we will open a new track of interactive setting as we did in BIRD-Interact and remove Oracle Evidence instead. Coming soon!

2. We have released Mini-Interact, an SQLite-based interactive benchmark with a self-contained environment that requires no Docker setup. This lightweight version enables fast development and testing of interactive intelligence for agentic data exploration with users. -

Oct. 9, 2025: We release our paper of BIRD-Interact, detailing how we built the dataset and our key findings on why interaction and communication are critical for frontier LLMs to become more reliable assistance in the DBA cycle.

Currently, GPT-5 (Med) only achieves 8.67% SR on

c-Interactand 17.00% SR ona-Interactof full tasks. Additionally, we will releaseMini-Interactwhich will be operated in sqlite, with our trained local and stable user simulator. It is currently undergoing alignment and function testing before release. If you think our work is helpful to you, we will feel grateful if you can star us, which is a strong motivation for boosting our open-source efforts! - Sep. 19, 2025: We’ve released BIRD23-train-filtered, a high-quality filtered subset of the BIRD train split (6,601/9,428 ≈70%) that works as a drop-in replacement for text-to-SQL finetuning; See the example finetuning usage in here.

- Sep. 10, 2025: We're excited to announce the release of the LiveSQLBench-Base-Full-V1 (600)! The first text-to-SQL benchmark covering all SQL spectrum with Hierarchical Knowlegde Base (HKB) and test cases. We provide two types of queries: normal query and colloquial queries for people to test according to their own needs. The flag model Gemini-2.5-pro can only achieve 28.67 in colloquial queries, and 35.67 in normal queries. The base-lite and base-full-v1 would be locked version for development of research methods. The detailed performance is in our website.

-

Aug. 26, 2025: We're excited to announce the release of the BIRD-Interact-Full (600) set! It's a tough one—the best LLMs are only achieving a 16.33% success rate, with just 10.0% on the

c-interactanda-interactportions. For more details, please visit our project website. We'll be sending the Ground Truth & Test cases to our mailing list this week. If you want early access, please send an email as instructed on the site for an automatic download. On another note, we've also released a SQLite version of LiveSQLBench-Lite for easier local research. The full LiveSQLBench-Base and -Large versions are coming soon! -

Jul. 15, 2025: LLMs always struggle with self-correction due to self-enhancement bias, a problem particularly pronounced in SQL generation where declarative syntax and concise logs would provide limited guidance. Our new work in

ACL Main, SHARE, mitigates this significantly by (1) converting SQL queries into procedural programming steps and (2) proposing a generalized pipeline through on-policy multi-agent methods for fine-tuning SLMs.

Models: BAM, SAM, LOM

Dataset: https://huggingface.co/datasets/birdsql/share-bam

Paper: https://huggingface.co/papers/2506.00391

Code: https://github.com/quge2023/SHARE - Jul. 10, 2025: We optimized and upload bird-mini-dev with 3 dialects to huggingface according to community. Thanks!! Please download and check!

- Jul. 10, 2025: We release the human performance scores on BIRD-CRITIC 1.0! It contains both the group with and without usage of GenAI Tools. The huge gap encourages more intelligent solutions to serve users in the real-world DB applications. Please check~~.

- Jun. 25, 2025: We document our procedures, findings, and training recipe for open-source LLM agents that resolve SQL debugging tasks in our paper SWE-SQL.

- Jun. 08, 2025: We have released bird-critic-1.0-postgresql (sql issues for single dialects). Check out the data in Hugging Face and the newest code in GitHub. It seems that bird-critic is a challenging reasoning tasks for text-to-SQL since all top-peforming models are reasoning-based models. Have fun! Thanks!

-

Jun 4, 2025: We release BIRD-Interact, a comprehensive interactive evaluation for text-to-SQL models. It contains conversational (

c-Interact) and agentic (a-Interact) interaction modes. Top results: o3-mini achieves 24.4% Success Rate onc-Interact, while Claude-3.7-Sonnet reaches 17.78% Success Rate ona-Interact. We also discovered Interaction-Time Scaling (ITS) - performance scales over extended interactions. Enjoy! -

May 29, 2025: We release LiveSQLBench, the first containmination-free text-to-SQL benchmark covering full SQL specturm featuring more advanced SQLs, annotated RDBs, structrued / unstructured knowledge base, test cases, etc.

First, we present

LiveSQLBench-Base-Litewith 270 tasks for trail. Even though Lite is the easiest version with the most clear conditions, the SOTA modelo3-minionly achieves 44.81% Success Rate. We will update full set and settings. You can preview and interact with data samples in our website. Stay tuned! -

May. 25, 2025: Please report issues when you find any more of BIRD-SQL 2023,

we will check and clean dev set (1534) for the last time during summer by considering all feedbacks.

Next week, we will start our new project

LiveSQLBench, the first one-stop containmination-free text-to-SQL benchmark covering full SQL specturm featuring more advanced SQLs, databases, hierarichal / unstructured knoweldge base, test cases. Also it supports multi-turn conversational and interactive evaluation via ourBIRD-Interact, which will be released together. - May 22, 2025: We update Single-Model Leaderboard, now the Self-Consistency column has 4 values: empty (no self consistency used); Few: 1-7 candidates used in majority vote; Many: 8-32 candidates; Scale: >32 candidates;

- Apr. 20, 2025: We have released bird-critic-1.0-open (600 tasks by 4 dialects). Check out the data in Hugging Face and the newest code in GitHub. The full set of PostgreSQL will be released 1 week later. It seems that bird-critic is a challenging reasoning tasks for text-to-SQL since all top-peforming models are reasoning-based models. Have fun! Thanks!

- Feb. 4, 2025: We've launched BIRD-Critic (a.k.a SWE-SQL), a brand new text-to-SQL benchmark that really digs into reasoning challenges! A lite version is ready for exploration. Full sets are coming soon! Feel free for any feedbacks! Your inputs and suggestions are much appreciated!

-

Nov. 26, 2024: Thanks the support of

BIRD-SQL 2023! Now we are pleased to share that the projectBIRD 2025has been started. It will contains 4-6 new benchmarks with each covering its special focus of professional databases and their knowledge in the wild applications. We will release the first benchmark by early Jan. Feel free to let us know your needs or suggestions for cooking new generations of Text-to-SQL challenges. Thanks! - Aug. 4, 2024: The Reward-based Valid Efficiency Score (R-VES) will be used as the efficiency metric for future test submissions. The rationale and formula for R-VES can be found in the Mini-Dev repository. You can check the legacy VES scores for previous submissions here.

- Jun. 30, 2024: Due to large requests of test submissions about mixed models (open-source + GPU-based closed source), we update the submission instructions to accelerate your waiting time. Please check it out!

- Jun. 30, 2024: If you are interested in code agent, please do not miss a SOTA code agent implementation by OpenDevin for BIRD Dev!

-

Jun. 27, 2024:

Excited to announce the release of our

BIRD Mini-Dev dataset

with 500 high-quality examples. This dataset includes

all BIRD keywords, with modifications for questions such

as the addition of

window function. We are the first to deliver it in not only SQLite, but also MySQL, and PostgreSQL. We include Soft-F1 and R-VES metrics to reduce bias. Don't miss thecolumn_meaning.jsonfile, preprocessed by TA-SQL. Available for dev and testing set. Check out our work here: Before Generation, Align it! A Novel and Effective Strategy for Mitigating Hallucinations in Text-to-SQL Generation (TA-SQL), appearing atACL 2024Findings. -

Apr. 27, 2024:

Due to large volume of requests, we now modify the

license of our data to

CC BY-SA 4.0. However, we will not take responsibility of any bad purposes by using our data. Since we develop this benchmark for research and healthy application only. - Mar 13, 2024: Please also take a look at our related work: Tapilot-Crossing, which is the first challenging and more realistic benchmark designed to evaluate Large Language Model (LLM) agents on interactive data analysis tasks. The code includes Python and Private Library. And it covers 6 common agent actions in evaluation.

-

Sept 25, 2023:

We have released a cleaner version of

dev set. Please download dev set again. We checked all cases of dev set and fixed all errors that we found. After cleaning, the ChatGPT (gpt-3.5-turbo) and GPT4 (gpt-4-32k) EX scores have improved to 42.24 (from 37.22) and 49.15 (from 46.35), respectively. Thanks for all feedbacks! -

Sept 21, 2023:

Our paper has been accepted by

NeurIPS 2023as aSpotlight!!! Thanks for all the efforts and suggestions of co-authors, anonymous reviewers, awesome researchers/users in github or emails. - July 17, 2023: We update newest results of GPT-4, Claude-2 and Palm-2.

-

July 14, 2023:

The data link has been updated, fixing the schema names

in the CSV files. Additionally, tied results caused by

order_by limit 1are now considered. Both SQL queries - with and without accounting for tied results - are valid at this time. - Jun 12, 2023: We are welcome to any suggestions and reported gold errors in help_wanted. Any of your help is appreciated!

- Jun 5, 2023: We open-sourced our Graphix-T5, a graph-aware semi-pretrained text-to-text PLM specifically designed to improve multi-hop reasoning for the complex text-to-SQL task.

- May 30, 2023: If you are interested in ICL, please check out our interesting work deep-thinking🤔. Generate 1000 models for 1000 people smoothly!

Surprise from BIRD

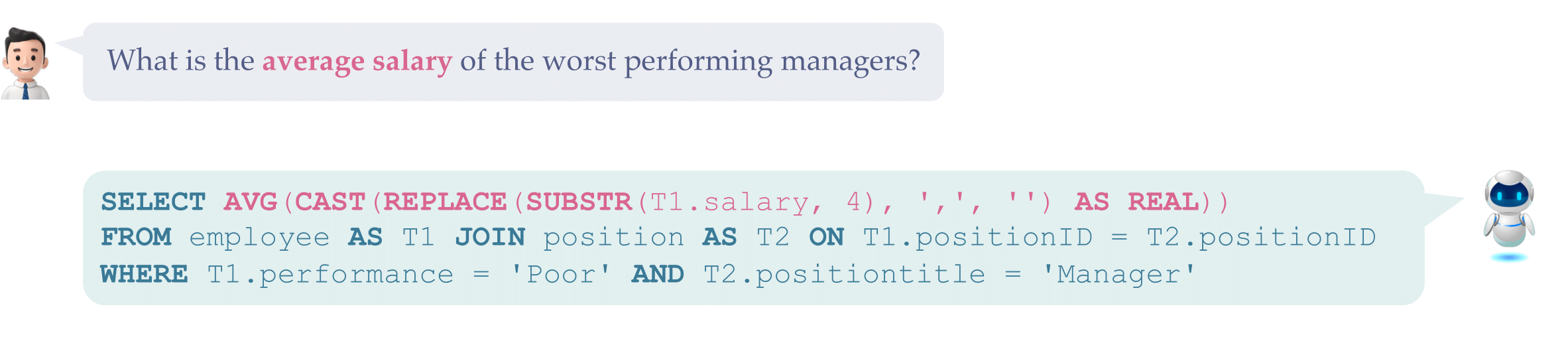

1. Large and Dirty values: Due to the nature of the real-world scenarios from which BIRD's database values were collected, they typically retain their original and frequently "dirty" format. Hence, text-to-SQL parsers must first analyze these values to account for their non-standard format before engaging in reasoning.

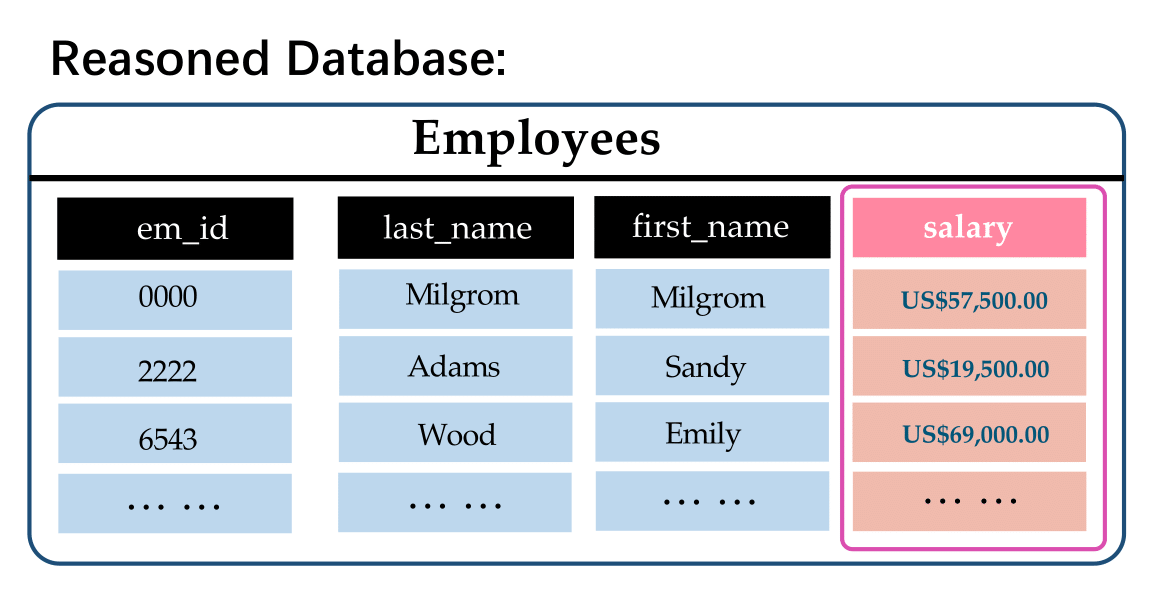

2. External Knowledge: "account.type = 'OWNER'" can be inferred by the knowledge evidence: "The condition of the loans require the account type should be the owner."

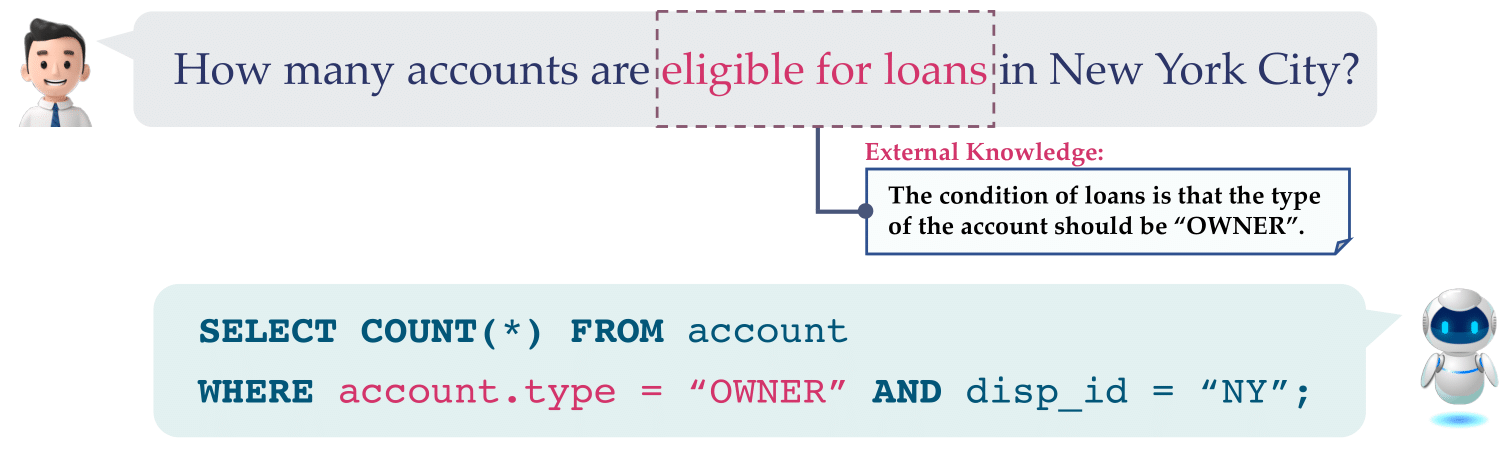

3. Text-to-Efficient-SQL: BIRD is the first text-to-SQL benchmark designed to encourage semantic parsers to produce SQL queries that are not only correct but also efficient. This emphasis on efficiency is especially valuable in real-world data / business analysis circumstances.

Submission

Please follow the Submission Guideline (below) and contact

bird.bench23@gmail.com for test evaluation.

Ususally, we will return your results in 10 days!

Subscribe to BIRD Update

Bird is a long-term research project aimed at bridging the gap between semantic parsing models and the success of database applications. To receive the latest updates of the dataset, you can leave your email address.

Citation

@article{li2024can,

title={Can llm already serve as a database interface? a big bench for large-scale database grounded text-to-sqls},

author={Li, Jinyang and Hui, Binyuan and Qu, Ge and Yang, Jiaxi and Li, Binhua and Li, Bowen and Wang, Bailin and Qin, Bowen and Geng, Ruiying and Huo, Nan and others},

journal={Advances in Neural Information Processing Systems},

volume={36},

year={2024}

}

| Model | Code | Size | Oracle Knowledge | Dev (%) | Test (%) | |

|---|---|---|---|---|---|---|

|

Human Performance Data Engineers + DB Students |

✔️ | 92.96 | ||||

| Dec 16, 2025 | AskData + GPT-4o AT&T CDO - DSAIR [Shkapenyuk et al. '25] |

UNK | ✔️ | 77.64 | 81.95 | |

| Sep 25, 2025 | Agentar-Scale-SQL Ant Group [Pengfei Wang et al. '25] |

[link] | UNK | ✔️ | 74.90 | 81.67 |

| July 14, 2025 | LongData-SQL LongShine AI Research |

UNK | ✔️ | 74.32 | 77.53 | |

| Jan 02, 2026 | Zhiwen-Lingsi-Agent China Telecom, TeleAI |

UNK | ✔️ | 73.53 | 76.63 | |

| Jan 26, 2026 | DeepEye-SQL Anonymous |

UNK | ✔️ | 73.53 | 76.58 | |

| Dec 4, 2025 | Q-SQL AWS-Quick Science [Ravi Shankar et al.'25] |

30B-3B-MoE | ✔️ | 72.99 | 76.47 | |

| Feb 6, 2026 | MIC2-SQL Hunan University |

UNK | ✔️ | 74.45 | 76.41 | |

| Jan 14, 2026 | SiriusAI-Text2SQL-Agent Tencent Data Computing Platform |

UNK | ✔️ | 74.64 | 76.30 | |

| Apr 16, 2025 | CHASE-SQL + Gemini Google Cloud [Pourreza et al. '24] |

UNK | ✔️ | 74.90 | 76.02 | |

| Feb 21, 2026 | RED-SQL South China Normal University |

30B | ✔️ | 74.19 | 75.91 | |

| Sep 22, 2025 | JoyDataAgent-SQL JD:CHO-JDT-JDL |

[link] | UNK | ✔️ | 74.25 | 75.85 |

| Oct 23, 2025 | Sinovatio-SQL Sinovatio AI Lab |

UNK | ✔️ | 73.72 | 75.80 | |

| May 30, 2025 | TCDataAgent-SQL Tencent Cloud |

UNK | ✔️ | 74.12 | 75.74 | |

| Feb 27, 2025 | Contextual-SQL Contextual AI |

[link] | UNK | ✔️ | 73.50 | 75.63 |

| Dec 17, 2024 | XiYan-SQL Alibaba Cloud [Yifu Liu et al. '24] |

[link] | UNK | ✔️ | 73.34 | 75.63 |

| Sep 1, 2025 | DB-SQL Anonymous |

UNK | ✔️ | 73.66 | 75.35 | |

| May 30, 2025 | CYAN-SQL Tencent Cloud / Fudan University |

UNK | ✔️ | 73.47 | 75.35 | |

| Jan 8, 2026 | MCR-SQL + Qwen2.5-Coder-32B-Instruct Anonymous |

32B | ✔️ | 72.88 | 75.29 | |

| Oct 20, 2025 | DeepEye-SQL Anonymous |

3B-MOE | ✔️ | 73.53 | 75.07 | |

| Oct 30, 2025 | Spektr-SQL Amazon Ads-SpektrBot [Ravi Shankar et al.] |

30B-3B-MoE | ✔️ | 72.10 | 74.85 | |

| Sep 10, 2025 | JT-SQLAgent CMCC, JIUTIAN Research |

32B | ✔️ | 73.60 | 74.06 | |

| May 27, 2025 | CSC-SQL + XiYanSQL-QwenCoder-32B-2412 Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 32B | ✔️ | 71.33 | 73.67 |

| Oct 27, 2024 |

ExSL + granite-34b-code IBM Research AI |

34B | ✔️ | 72.43 | 73.17 | |

| Mar 31, 2025 | Reasoning-SQL 14B Google Cloud / Stanford [Pourreza et al. '25] |

14 B | ✔️ | 72.29 | 72.78 | |

| Aug 06, 2025 |

GenaSQL Gena Co. [Dönder et al. '25] |

[link] | UNK | ✔️ | 70.53 | 72.28 |

| Aug 21, 2024 | OpenSearch-SQL, v2 + GPT-4o Alibaba Cloud [Xiangjin Xie et al. '25] |

[link] | UNK | ✔️ | 69.30 | 72.28 |

| Mar 4, 2025 | OmniSQL-32B Renmin University of China + ByteDance Infra Lab [Li et al. '25] |

[link] | 32B | ✔️ | 69.23 | 72.05 |

| Jul 22, 2024 | Distillery + GPT-4o Distyl AI Research [Maamari et al. '24] |

UNK | ✔️ | 67.21 | 71.83 | |

| Sep 22, 2025 | Share + GPT-5 HKU [Qu et al. ACL Main'25] |

UNK | ✔️ | 65.45 | 71.83 | |

| May 27, 2025 | CSC-SQL + Qwen2.5-Coder-7B-Instruct Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 7B | ✔️ | 69.19 | 71.72 |

| Jun 30, 2025 | LEAF-SQL JXUFE |

[link] | 14B | ✔️ | 69.43 | 71.60 |

| Apr 10, 2025 |

Queryosity Conrad Labs queryosity.io |

UNK | ✔️ | 69.40 | 71.16 | |

| May 21, 2024 |

CHESSIR +CG +UT Stanford [Talaei et al.'24] |

[link] | UNK | ✔️ | 68.31 | 71.10 |

| May 08, 2025 | Infly-RL-SQL-32B |

[link] | 32B | ✔️ | 70.08 | 70.60 |

| Aug 1, 2025 | SLM-SQL + Qwen2.5-Coder-1.5B-Instruct Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 1.5B | ✔️ | 67.08 | 70.49 |

| Aug 28, 2024 | Insights AI Uber Freight |

UNK | ✔️ | 72.16 | 70.26 | |

| Feb 24, 2025 | Alpha-SQL + Qwen2.5-Coder-32B-Instruct HKUST(GZ) [Li et al. '25] |

[link] | 32B | ✔️ | 69.70 | 70.26 |

| Aug 30, 2024 | PURPLE + RED + GPT-4o Fudan University + Transwarp Technology |

UNK | ✔️ | 68.12 | 70.21 | |

| Feb 21, 2026 | Claude Opus 4.6 Baseline |

UNK | ✔️ | New Dev 68.77 |

70.15 |

|

| Nov 10, 2024 |

PB-SQL, GPT-4o Seoul National University |

UNK | ✔️ | 68.64 | 69.26 | |

| Jan 9, 2025 |

GSR Anonymous |

[link] | UNK | ✔️ | 66.88 | 69.26 |

| Dec 18, 2024 |

TC-SQL mindflow.ai |

UNK | ✔️ | 70.93 | 69.20 | |

| Jan 09, 2025 | XiYanSQL-QwenCoder-32B Alibaba Cloud [Yifu Liu et al. '24] |

[link] | 32B | ✔️ | 67.01 | 69.03 |

| Jul 14, 2024 |

RECAP + Gemini Google Cloud |

UNK | ✔️ | 66.95 | 69.03 | |

| Jul 2, 2024 |

ByteBrain ByteDance Infra Lab |

33B | ✔️ | 65.45 | 68.87 | |

| Nov 18, 2024 |

RSL-SQL + GPT-4o Anonymous [Cao et al.'24] |

[link] | UNK | ✔️ | 67.21 | 68.70 |

| Feb 21, 2026 | Qwen3-Coder-480B-A35B Baseline |

UNK | ✔️ | New Dev 66.17 |

68.14 |

|

| Mar 4, 2025 | OmniSQL-7B Renmin University of China + ByteDance Infra Lab [Li et al. '25] |

[link] | 7B | ✔️ | 69.04 | 67.97 |

| May 14, 2024 |

ExSL + granite-20b-code IBM Research AI |

20B | ✔️ | 65.38 | 67.86 | |

| Nov 11, 2024 | AskData + GPT-4o AT&T CDO - DSAIR [Shkapenyuk et al. '25] |

UNK | 65.91 | 67.41 | ||

| Feb 21, 2026 | Claude 4.5 Sonnet Baseline |

UNK | ✔️ | New Dev 67.34 |

66.85 |

|

| May 21, 2024 |

CHESSIR +SS +CG Stanford [Talaei et al.'24] |

[link] | UNK | ✔️ | 65.00 | 66.69 |

| Sep 23, 2024 |

E-SQL + GPT-4o Bilkent University [Caferoğlu et al.'24] |

[link] | UNK | ✔️ | 65.58 | 66.29 |

| Aug 29, 2024 | Arcwise + GPT-4o Arcwise |

UNK | ✔️ | 67.99 | 66.21 | |

| Sep 10, 2025 |

NucliOS MathCo |

UNK | ✔️ | 63.95 | 65.90 | |

| Apr 30, 2025 |

Command A Cohere AI [Team Cohere et al.'25] |

[link] | 111B | ✔️ | 63.49 | 65.68 |

| Nov 18, 2024 |

RSL-SQL + DeepSeek-v2 Anonymous [Cao et al.'24] |

[link] | UNK | ✔️ | 63.56 | 65.51 |

| Jan 14, 2024 |

MCS-SQL + GPT-4 Dunamu [Lee et al. '24] |

UNK | ✔️ | 63.36 | 65.45 | |

| Aug 20, 2024 | SCL-SQL Xffuture |

UNK | ✔️ | 64.73 | 65.23 | |

| Apr 08, 2024 |

OpenSearch-SQL,v1 + GPT-4 Alibaba Cloud |

UNK | ✔️ | 61.34 | 64.95 | |

| Jun 7, 2024 |

SFT CodeS-15B + SQLFixAgent Soochow University |

UNK | ✔️ | -- | 64.62 | |

| Aug 30, 2024 | PURPLE + GPT-4o Fudan University + Transwarp Technology |

UNK | ✔️ | 62.97 | 64.51 | |

| Oct 10, 2024 | MSL-SQL + DeepSeek-V2.5 Wuhan University of Technology |

236B | ✔️ | 66.82 | 64.00 | |

| Feb 6, 2025 | EBA-SQL + GPT-4 Northeastern University |

UNK | ✔️ | 64.60 | 63.50 | |

| Feb 21, 2024 |

Sense Anonymous |

13B | ✔️ | 55.48 | 63.39 | |

| Mar 11, 2025 |

OneSQL-v0.1-Qwen-32B onekq.ai |

[link] | 32B | ✔️ | 64.60 | 63.33 |

| Apr 10, 2024 |

GRA-SQL Tencent CDP-youpu |

UNK | ✔️ | 62.58 | 63.22 | |

| Feb 21, 2026 | GLM-4.7 Baseline |

UNK | ✔️ | New Dev 63.82 |

62.94 |

|

| Jun 1, 2024 |

SuperSQL HKUST(GZ) [Li et al. '24] |

[link] | UNK | ✔️ | 58.50 | 62.66 |

| Aug 1, 2025 | SLM-SQL + Qwen2.5-Coder-0.5B-Instruct Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 0.5B | ✔️ | 56.87 | 61.82 |

| Mar 27, 2024 |

{Chat2Query} (GPT-4 + data entity modeling) (PingCAP) PingCAP |

[link] | UNK | ✔️ | 58.15 | 60.98 |

| Feb 21, 2026 | DeepSeek-R1 Baseline |

UNK | ✔️ | New Dev 61.67 |

60.93 |

|

| Nov 16, 2023 |

Dubo-SQL, v1 Mercator Technologies |

UNK | ✔️ | 59.71 | 60.71 | |

| Jan 30, 2026 |

Struct-SQL Crater Labs [Thaker et al. CAIAC'26] |

[link] | 4B | ✔️ | 54.63 | 60.42 |

| Oct 12, 2023 |

SFT CodeS-15B Renmin University of China [Li et al. SIGMOD'24] |

[link] | 15B | ✔️ | 58.47 | 60.37 |

| Feb 27, 2024 |

DTS-SQL + DeepSeek 7B University of Alberta [Pourreza et al. '24] |

[link] | 7B | ✔️ | 55.8 | 60.31 |

| Feb 21, 2026 | Kimi-K2-Thinking Baseline |

UNK | ✔️ | New Dev 60.63 |

59.87 |

|

| Sep 23, 2024 |

E-SQL + GPT-4o mini Bilkent University [Caferoğlu et al.'24] |

[link] | UNK | ✔️ | 61.60 | 59.81 |

| Nov 21, 2023 |

MAC-SQL + GPT-4 BUAA & Tencent [Wang et al. '23] |

UNK | ✔️ | 57.56 | 59.59 | |

| Oct 12, 2023 |

SFT CodeS-7B Renmin University of China [Li et al. SIGMOD'24] |

[link] | 7B | ✔️ | 57.17 | 59.25 |

| May 27, 2024 |

TA-SQL + GPT-4 HKU [Qu et al. ACL Findings'24] |

[link] | UNK | ✔️ | 56.19 | 59.14 |

| Nov 09, 2023 |

DAIL-SQL + GPT-4 Alibaba Group [Gao and Wang et al. VLDB'24] |

[link] | UNK | ✔️ | 54.76 | 57.41 |

| May 24, 2024 |

ExSL + granite-20b-code IBM Research AI |

20B | 51.69 | 57.13 | ||

| Aug 10, 2024 |

DeepSeek Baseline |

[link] | 236B | ✔️ | 56.13 | 56.68 |

| Aug 15, 2023 |

DIN-SQL + GPT-4 University of Alberta [Pourreza et al. '23] |

[link] | UNK | ✔️ | 50.72 | 55.90 |

| Aug 08, 2024 |

Mistral Baseline |

[link] | 123B | ✔️ | 53.52 | 55.84 |

| Jul 01, 2023 |

GPT-4 Baseline |

[link] | UNK | ✔️ | 46.35 | 54.89 |

| Nov 8, 2024 |

Interactive-T2S Anonymous |

UNK | 54.56 | 54.11 | ||

| Sep 19, 2024 |

Prem-1B-SQL Prem AI |

[link] | 1B | ✔️ | - | 51.54 |

| Jul 16, 2023 |

Claude-2 Baseline |

[link] | UNK | ✔️ | 42.70 | 49.02 |

| Nov 23, 2023 |

Open-SQL Anonymous |

7B | ✔️ | 37.68 | 47.74 | |

| Mar 17, 2023 |

ChatGPT + CoT HKU & DAMO [Li et al. NeurIPS'23] |

[link] | UNK | ✔️ | 36.64 | 40.08 |

| Mar 17, 2023 |

ChatGPT Baseline |

UNK | ✔️ | 37.22 | 39.30 | |

| Feb 17, 2023 |

Codex Baseline |

175B | ✔️ | 34.35 | 36.47 | |

| Jul 16, 2023 |

Palm-2 Baseline |

[link] | UNK | ✔️ | 27.38 | 33.04 |

| Mar 17, 2023 |

ChatGPT + CoT HKU & DAMO [Li et al. NeurIPS'23] |

[link] | UNK | 25.88 | 28.95 | |

| Mar 17, 2023 |

ChatGPT Baseline |

UNK | 24.05 | 26.77 | ||

| Feb 17, 2023 |

Codex Baseline |

175B | 25.42 | 24.86 | ||

| Feb 5, 2023 |

T5-3B Baseline |

3B | ✔️ | 23.34 | 24.05 | |

| Feb 3, 2023 |

T5-Large Baseline |

770M | ✔️ | 19.75 | 20.94 | |

| Feb 3, 2023 |

T5-Base Baseline |

220M | ✔️ | 11.54 | 12.89 | |

| Feb 5, 2023 |

T5-3B Baseline |

3B | 10.37 | 11.17 | ||

| Feb 3, 2023 |

T5-Large Baseline |

770M | 9.71 | 10.38 | ||

| Feb 3, 2023 |

T5-Base Baseline |

220M | 6.32 | 7.06 |

| Model | Code | Size | Oracle Knowledge | Test | |

|---|---|---|---|---|---|

| Human Performance Data Engineers + DB Students |

✔️ | 83.26 | |||

| Sep 25, 2025 | Agentar-Scale-SQL Ant Group [Pengfei Wang et al. '25] |

[link] | UNK | ✔️ | 77.00 |

| Dec 16, 2025 | AskData + GPT-4o AT&T CDO - DSAIR [Shkapenyuk et al. '25] |

UNK | ✔️ | 76.31 | |

| July 14, 2025 | LongData-SQL LongShine AI Research |

UNK | ✔️ | 71.89 | |

| Dec 17, 2024 | XiYan-SQL Alibaba Cloud [Yifu Liu et al. '24] |

[link] | UNK | ✔️ | 71.41 |

| Oct 27, 2024 |

ExSL + granite-34b-code IBM Research AI |

34B | ✔️ | 71.37 | |

| Feb 21, 2026 | RED-SQL South China Normal University |

30B | ✔️ | 71.18 | |

| Jan 26, 2026 | DeepEye-SQL Anonymous |

UNK | ✔️ | 70.43 | |

| Oct 23, 2025 | Sinovatio-SQL Sinovatio AI Lab |

UNK | ✔️ | 70.41 | |

| Sep 22, 2025 | JoyDataAgent-SQL JD:CHO-JDT-JDL |

UNK | ✔️ | 70.16 | |

| Feb 27, 2025 | Contextual-SQL Contextual AI |

UNK | ✔️ | 70.02 | |

| Apr 16, 2025 |

CHASE-SQL + Gemini Google Cloud [Pourreza et al. '24] |

UNK | ✔️ | 69.94 | |

| Jan 14, 2026 | SiriusAI-Text2SQL-Agent Tencent Data Computing Platform |

UNK | ✔️ | 69.77 | |

| Sep 1, 2025 | DB-SQL Anonymous |

UNK | ✔️ | 69.54 | |

| Aug 21, 2024 | OpenSearch-SQL, v2 + GPT-4o Alibaba Cloud |

UNK | ✔️ | 69.36 | |

| Oct 20, 2025 | DeepEye-SQL Anonymous |

3B-MOE | ✔️ | 68.82 | |

| Mar 31, 2025 | Reasoning-SQL 14B Google Cloud / Stanford [Pourreza et al. '25] |

14 B | ✔️ | 68.67 | |

| May 27, 2025 | CSC-SQL + XiYanSQL-QwenCoder-32B-2412 Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 32B | ✔️ | 67.84 |

| May 27, 2025 | CSC-SQL + Qwen2.5-Coder-7B-Instruct Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 7B | ✔️ | 67.47 |

| Jul 22, 2024 | Distillery + GPT-4o Distyl AI Research |

UNK | ✔️ | 67.41 | |

| Mar 4, 2025 | OmniSQL-32B Renmin University of China + ByteDance Infra Lab [Li et al. '25] |

[link] | 32B | ✔️ | 67.05 |

| May 21, 2024 | CHESSIR +CG +UT Stanford [Talaei et al.'24] |

[link] | UNK | ✔️ | 66.53 |

| Jul 5, 2024 | Insights AI Uber Freight |

UNK | ✔️ | 66.39 | |

| May 14, 2024 | ExSL + granite-20b-code IBM Research AI |

20B | ✔️ | 66.25 | |

| Jul 14, 2024 | RECAP + Gemini Google Cloud |

UNK | ✔️ | 65.70 | |

| Aug 30, 2024 | PURPLE + RED + GPT-4o Fudan University + Transwarp Technology |

UNK | ✔️ | 65.62 | |

| Aug 06, 2025 | GenaSQL Gena Co. gena.co |

UNK | ✔️ | 65.52 | |

| Aug 1, 2025 | SLM-SQL + Qwen2.5-Coder-1.5B-Instruct Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 1.5B | ✔️ | 65.25 |

| Dec 18, 2024 | TC-SQL mindflow.ai |

UNK | ✔️ | 65.19 | |

| Mar 4, 2025 | OmniSQL-7B Renmin University of China + ByteDance Infra Lab [Li et al. '25] |

[link] | 7B | ✔️ | 65.04 |

| Apr 10, 2025 | Queryosity queryosity.io |

UNK | ✔️ | 64.73 | |

| Jan 9, 2025 | GSR Anonymous |

[link] | UNK | ✔️ | 64.41 |

| Jan 09, 2025 | XiYanSQL-QwenCoder-32B Alibaba Cloud [Yifu Liu et al. '24] |

[link] | 32B | ✔️ | 64.65 |

| Aug 29, 2024 | Arcwise + GPT-4o Arcwise |

UNK | ✔️ | 63.68 | |

| Nov 11, 2024 | AskData + GPT-4o AT&T CDO - DSAIR [Shkapenyuk et al. '25] |

UNK | 63.34 | ||

| May 21, 2024 | CHESSIR +SS +CG Stanford [Talaei et al.'24] |

[link] | UNK | ✔️ | 62.77 |

| Sep 23, 2024 | E-SQL + GPT-4o Bilkent University [Caferoğlu et al.'24] |

[link] | UNK | ✔️ | 62.43 |

| Jun 7, 2024 | SFT CodeS-15B + SQLFixAgent Soochow University |

UNK | ✔️ | 61.37 | |

| Aug 20, 2024 | SCL-SQL Xffuture |

UNK | ✔️ | 61.28 | |

| Jan 14, 2024 | MCS-SQL + GPT-4 Dunamu [Lee et al. '24] |

UNK | ✔️ | 61.23 | |

| Sep 10, 2025 | NucliOS MathCo |

UNK | ✔️ | 60.51 | Feb 27, 2024 | PB-SQL Seoul National University |

UNK | ✔️ | 60.36 |

| Aug 30, 2024 | PURPLE + GPT-4o Fudan University + Transwarp Technology |

UNK | ✔️ | 60.35 | |

| Feb 6, 2025 | EBA-SQL + GPT-4 Northeastern University |

UNK | ✔️ | 60.13 | |

| Mar 11, 2025 | OneSQL-v0.1-Qwen-32B onekq.ai |

[link] | 32B | ✔️ | 60.02 |

| Apr 30, 2025 |

Command A Cohere AI [Team Cohere et al.'25] |

[link] | 111B | ✔️ | 59.82 |

| Oct 10, 2024 | MSL-SQL + DeepSeek-V2.5 Wuhan University of Technology |

236B | ✔️ | 59.42 | |

| Nov 21, 2023 | MAC-SQL + GPT-4 BUAA & Tencent [Wang et al. '23] |

UNK | ✔️ | 57.60 | |

| Aug 1, 2025 | SLM-SQL + Qwen2.5-Coder-0.5B-Instruct Wuhan University of Technology + University of Science and Technology of China [Lei Sheng et al. '25] |

[link] | 0.5B | ✔️ | 57.11 |

| Oct 12, 2023 | SFT CodeS-15B Renmin University of China [Li et al. SIGMOD'24] |

[link] | 15B | ✔️ | 56.73 |

| Apr 10, 2024 | GRA-SQL Tencent CDP-youpu |

UNK | ✔️ | 56.63 | |

| Nov 16, 2023 | Dubo-SQL, v1 Mercator Technologies |

UNK | ✔️ | 56.63 | |

| May 24, 2024 | ExSL + granite-20b-code IBM Research AI |

20B | 56.11 | Mar 27, 2024 | {Chat2Query} (GPT-4 + data entity modeling) (PingCAP) PingCAP |

[link] | UNK | ✔️ | 56.06 |

| Oct 12, 2023 | SFT CodeS-7B Renmin University of China [Li et al. SIGMOD'24] |

[link] | 7B | ✔️ | 55.69 |

| Sep 23, 2024 | E-SQL + GPT-4o mini Bilkent University [Caferoğlu et al.'24] |

[link] | UNK | ✔️ | 55.64 |

| Nov 09, 2023 | DAIL-SQL + GPT-4 Alibaba Group [Gao and Wang et al. VLDB'24] |

[link] | UNK | ✔️ | 54.02 |

| Aug 10, 2024 | DeepSeek Baseline |

[link] | 236B | ✔️ | 53.25 |

| Aug 15, 2023 | DIN-SQL + GPT-4 University of Alberta [Pourreza et al. '23] |

[link] | UNK | ✔️ | 53.07 |

| Aug 08, 2024 | Mistral Baseline |

[link] | 128B | ✔️ | 52.59 |

| Jul 01, 2023 | GPT-4 Baseline |

[link] | UNK | ✔️ | 51.75 |

BIRD Mini-Dev

A Lite version of developtment dataset, which is designed to facilitate efficient and cost-effective development cycles, especially for testing and refining SQL query generation models. For more details, please visit the GitHub repository. For updating Leaderboard, please make sure your paper or resource is public available and submit a PR.

| Model | Code | Size | Oracle Knowledge | SQLite | MySQL | PostgreSQL | |

|---|---|---|---|---|---|---|---|

| Jun 31, 2024 |

TA + GPT-4 HKU [Qu et al. ACL Findings'24] |

[link] | UNK | ✔️ | 58.00 | 49.20 | 50.80 |

| Jun 31, 2024 | GPT-4 | UNK | ✔️ | 47.80 | 40.80 | 35.80 | |

| Jun 31, 2024 | GPT-4-32k | UNK | ✔️ | 47.00 | 43.20 | 35.00 | |

| Jun 31, 2024 | GPT-4-turbo | UNK | ✔️ | 45.80 | 41.00 | 36.00 | |

| Jun 30, 2026 |

Struct-SQL Crater Labs [Thaker et al. CAIAC'26] |

[link] | 4B | ✔️ | 45.00 | - | - |

| Jun 31, 2024 | Llama3-70b-instruct | 70B | ✔️ | 40.80 | 37.00 | 29.40 | |

| Jun 31, 2024 | GPT-35-turbo | UNK | ✔️ | 38.00 | 36.00 | 27.40 | |

| Jun 31, 2024 | GPT-35-turbo-instruct | UNK | ✔️ | 33.60 | 31.20 | 26.60 | |

| Jun 31, 2024 | Phi-3-medium-128k-instruct | 13B | ✔️ | 30.60 | 25.00 | 21.60 | |

| Jun 31, 2024 | Llama3-8b-instruct | 8B | ✔️ | 24.40 | 24.60 | 18.40 | |

| Jun 31, 2024 | Mixtral-8x7b | 46.7B | ✔️ | 21.60 | 13.60 | 12.40 |